Brillig

Understanding, Inc.

ChatScript is a control platform for structuring one's interactions with an LLM and all other sensory and body simulation mechanisms. It has transcended its origins as a mere conversational device and is now a way to have safe interactions with AI of all forms.

We craft systems that understand meaning

Robots, chatbots, virtual assistants

We have the most powerful technology out there and we know how to use it.

We make conversational chatbots as well as information query bots.

Siri is not a conversational bot; she is merely an information query bot.

Our bots carry on conversations, have personality, have a history, a family, friends.

Our future goals include all types of natural language systems and planners.

Brillig mission statement

Our aim is to always understand what is said to us and by us. So we can talk to you. We can read text, recall facts from it and incorporate them into the flow of conversation.

Comparing with Machine Learning as a technology.

Comparing with Large Language Models as a technology.

How does Brillig differ from the Gorillas using Machine Intelligence?

Our projects are faster to develop, cost less, involve less research and more production, and do what you want straight away - no learning involved. Plus everything we do involves personality. No one would accuse Siri or Alexa or Cortana of having a real personality, much less carrying on a conversation. Whereas we've won the Loebner Prize for machine conversation 4 of the last 6 years – most human-like chatbots. We believe that conversation without personality is boring and doesn't work. Conversation is an exchange of information, which means having a "person" on each side. We make characters. They can be an appealing teenage cat, a learned professor, the Big Bad Wolf with asthma, a genie who does your bidding, a sexy English tutor, a toy robot dog scared of water, or a Star Wars alien who gets drunk on clean water. We can even make You.

From an interview Bruce gave for a technology website...

Q: What role do you see for your work, or what can be learned from your work, in developing systems that do not only aim to entertain but to really retrieve information and/or carry out instructions accurately based on a dialogue in natural language? Aside from your work, what future do you see for this type of system? Can you say something about psychological insights that you have gained from studying these human/machine conversations? Can you comment something on the ethics of creating artificial personalities - humans have been known to attach emotionally even to things like bomb disposal robots, what about artificial characters that are purposefully made as human-like as possible? Why do you expect speech based interfaces to replace graphic ones? What are some of the major challenges remaining to solve in natural language processing? Could you say something on where the border lies between a machine actually understanding and using natural language, and merely giving an illusion of it? And will your work help bring on the AI apocalypse?

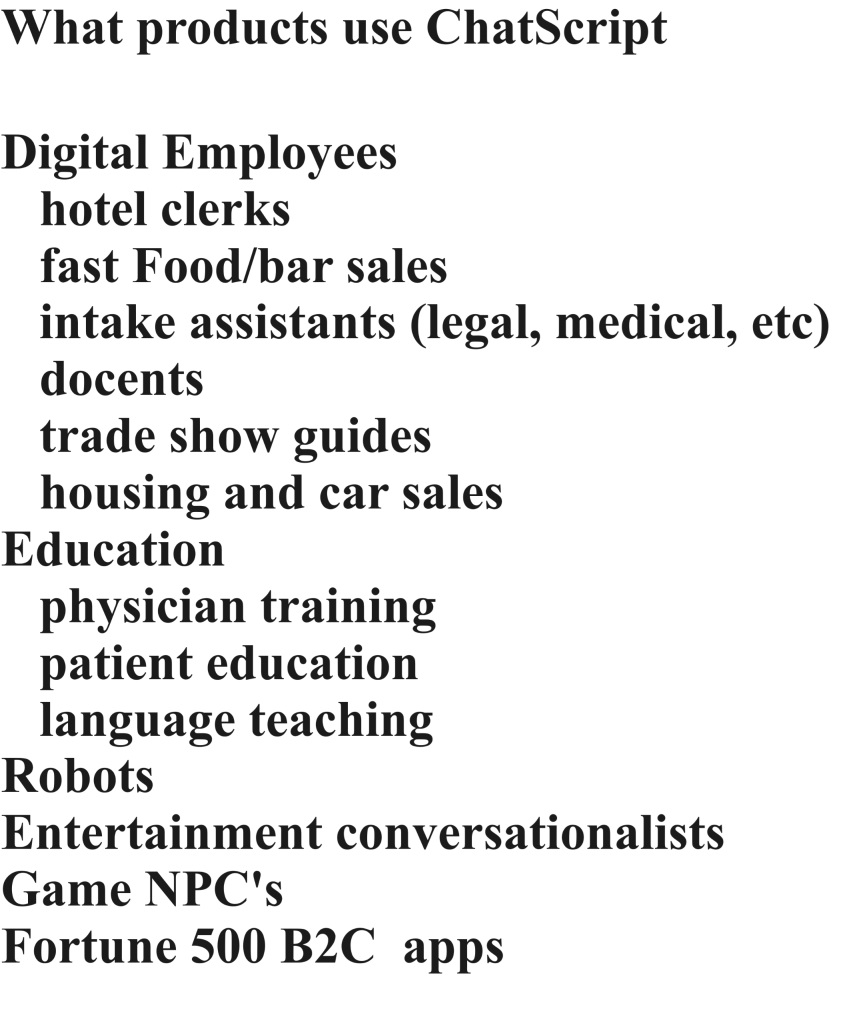

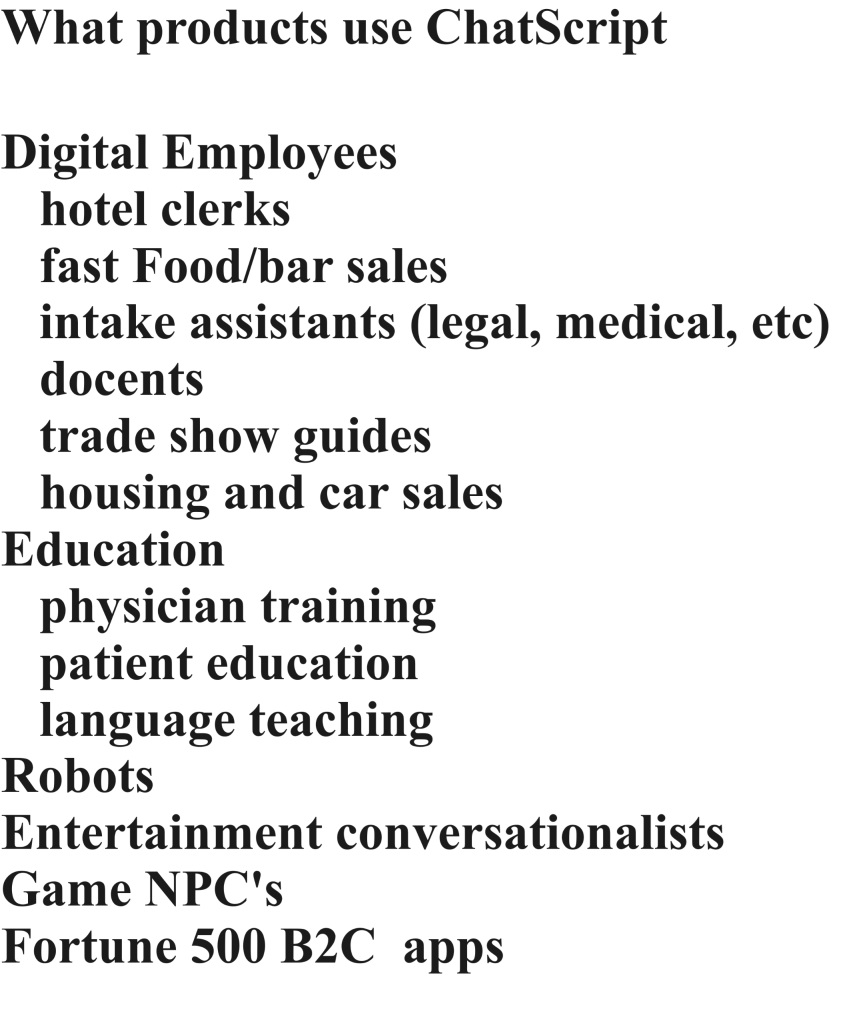

A: ChatScript is a general purpose natural language engine we developed, capable of being used in many ways. You only see it powering conversational characters where the goal is primarily entertainment. But the world is moving to a "conversational" user interface where you operate your appliances and computers via voice and not via mouse and keyboard.

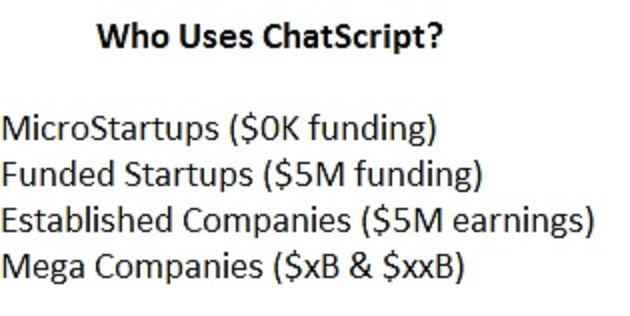

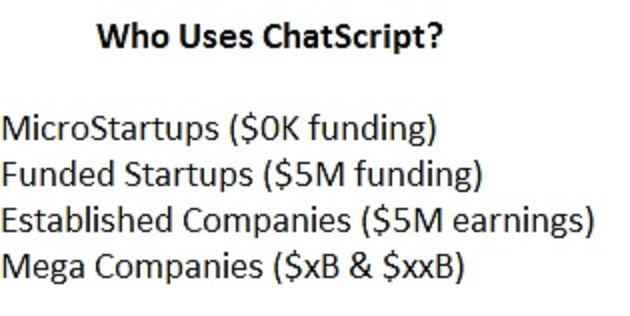

We've built chatbots that allow you to tell your phone to do things (set up meetings, play music of your choice, report on battery status, etc.). Siri and Cortana and Alexa are just the tip of the iceberg. And they can't converse. ChatScript can. So our work is exploring the practical use of the conversational UI. Some of it we are doing ourselves. And some of it is from other developers using ChatScript. It is open source and hundreds of people and institutions around the world have copies of it. Several start-ups use it as core technology. There is even a mega-entertainment company that uses it.

For command and control applications (like telling your phone or a robot what to do), we work on balancing how much confirmation to give from the chatbot and when. And we find that even for tasks which seem robotic, where you give a system an order and expect it to carry it out, people enjoy the "entertainment" aspect of the system having a personality. Would you rather just give orders to your phone or would you enjoy the concept that it is actually a genie trapped in your phone who can carry out your wishes, but due to the imprisonment in the phone has very limited powers and would like for you to find a way to help her escape?

People attribute human characteristics to their cars, to trees, to their pets. Is it ethical for us to assist them in doing that for gadgets by explicitly creating personalities and backstories you can chat about? Normally I don't see it as an ethical problem. All of entertainment is created for people to enjoy but you could argue it encourages passivity. My only real concern is if people would rather interact with our characters than with real people. But many people either don't have people to interact with or don't feel free to do so. So you can describe our conversational chatbot work as either a solution or a crutch. Not so with personalities tied to controlling the world around you. Then it's just an intelligent interface that makes things easier and sometimes comes with a touch of humor.

Aside from pure entertainment chatbots, ChatScript has been used for selling real estate in Chinese. We made a clone of the movie director Morgan Spurlock for his company website as a demo for his show Inside Man, for the episode about AI and how it could be of use to automate his life. The University of Ohio made a virtual patient that doctors can use to practice their diagnostic interviewing skills on. It has a full life-sized avatar with gestures as well. ChatScript has been used in a demo to teach children how to manage their asthma in a rather unique way. Two chatbots - the big bad wolf, who has recently developed asthma and is therefore unable to blow the pig's house down, and the little pig, who is quite brainy - chat about the wolf's problems and have daily adventures in Fairy Tale land. While they chat they turn to the child user and ask him questions about his life with asthma. And the user can butt into the conversation at any time, directing comments and questions to either chatbot or both. It's like a stage play where the audience is encouraged to join in.

Several years ago we created a demo to improve Amazon search using natural language. It uses Amazon's external API to perform their search, but because the demo determines what the user actually wants, it takes the results Amazon gives and reorders them, moving truly matching items to the top and pure junk to the bottom of the page. And if there are no matches, it does a revised query to simplify things for Amazon. Despite several years of work in-house by A9 (Amazon search subsidiary) to handle natural language in search, they still haven't replicated what we demoed to them back in 2012.
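The reorder-and-retry idea described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the demo's actual code: `Item`, `match_score`, `rerank`, and `search_with_fallback` are hypothetical names, the `search` argument is a stand-in for whatever external search API is called, scoring by word overlap is a deliberately crude substitute for real natural language understanding, and dropping the last term is just one possible way to simplify a query.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Item:
    title: str


def match_score(wanted: set[str], item: Item) -> int:
    """Count how many of the wanted terms appear in the item's title."""
    words = set(item.title.lower().split())
    return len(wanted & words)


def rerank(wanted: set[str], results: list[Item]) -> list[Item]:
    """Move the best matches to the top and non-matching items to the bottom.

    The sort is stable, so items with equal scores keep the order
    the search backend returned them in.
    """
    return sorted(results, key=lambda item: match_score(wanted, item), reverse=True)


def search_with_fallback(
    wanted: list[str], search: Callable[[str], list[Item]]
) -> list[Item]:
    """Query the backend; if nothing comes back, retry with a simpler query."""
    terms = list(wanted)
    while terms:
        results = search(" ".join(terms))
        if results:
            # Rank against everything the user asked for, not the reduced query.
            return rerank(set(wanted), results)
        terms.pop()  # assumed simplification strategy: drop the last term
    return []
```

The point of the sketch is the division of labor: the backend still does the retrieval, while the layer that knows what the user wanted decides the final order.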

Speech will replace the keyboard for several reasons. First, for devices where keyboards are impractical. Computers keep shrinking or relocating to the cloud and there's no room for a keyboard. This includes Apple's iWatch, Google Glass, Amazon's Echo, any home appliance like a stove. Soon what looks like a hearing aid will probably be your cell phone. It can hear you talk, it can put sound in your ear, and maybe it can project a displayed image (obviously some day holographic). Sure, it could display a virtual reality keyboard that you can pretend to type at, but who would really want to use such a clunky old-tech interface? And for now the idea of a direct brain-wired neural interface creeps me out, no matter that it might be even more efficient.

Second, people talk as their primary interface to communicate with other people. They can talk faster and more accurately than they can type. Why should they type to their computer when talking is easier? And why would I want to move my mouse to click into a folder, then double click on a document in that folder, then press Ctrl-A to select all text, then move my mouse to a menu on fonts and select a font size? I could instead say "open the document I most recently worked on and change the font size of everything to 16". That would be so much faster than all those manual steps.

Natural language processing has many challenges left to solve. One is that speech recognition isn't accurate in noisy environments, though Amazon Echo does a good job with its far-field technology of picking out speech directed at it from the other side of the room over music it is playing. And children's voices and foreign accents degrade speech recognition. Another is simply that it takes time to script or machine learn what to do with a user message. We "could" build a chatbot that can do the verbal document change I mentioned earlier. But it takes time to do that. Amazon Echo brings out new things it can do all the time and Siri has evolved a lot since she was first launched. It just takes time to set all these things up.

And computers still don't understand meaning, really. And have no common sense knowledge (of which there are millions of facts) because computers don't live embodied in a world. So if you ask a computer (as was done in the Loebner competition in a previous year) "Would it hurt you if I stabbed you with a towel?", none of the chatbot contestants had an answer that made any sense or would have been prepared to back up an answer with a logical reason. A human might answer "If you stab me hard enough, sure." or "No, because towels are soft." But at least chatbots these days can handle "What’s bigger, a large tooth or a tiny mountain?". Pronoun resolution is often hard. And answers that depend utterly on prior sentences.

It is popular to worry these days about AI creating sentient beings that will take over the world. Not that anyone anywhere is remotely close to developing sentience in a computer. All we really know how to do is create AI that has specific abilities. AIs can beat people in games and at Jeopardy. But I have no reason to believe that an AI with thousands of abilities is suddenly going to become sentient. But, on the off chance that will happen someday, maybe now is a good time to start treating your AIs with the same respect you give people. Many people coming to a chatbot site just want to insult it or have sex with it. I expect these people have trouble interacting with people in their daily life.

Currently it's all about illusion. Chatbots these days create the illusion that they understand meaning because they can pick up on patterns in a sentence and react appropriately. The tooth is small, the mountain is big, the user asked what is bigger. And it gives you the right answer, so obviously it understood you. But really it only recognized a simplified pattern. If you asked "Is a tooth bigger than a mountain at any time during the year?" it probably wouldn't understand the sentence and would give a spurious answer.

Chatbots are designed to create the illusion if you don't pick too hard at them. Most can't answer the simple question "why". So if you asked, "Where were you yesterday?" maybe the bot would answer "In San Francisco". If you then asked "why", it wouldn't answer. For some of our chatbots we put a lot of time into "why" answers. For a topic on recycling, the bot offers the gambit "How would you dispose of all the dead bodies constantly accumulating in the world?" which is an interesting and provocative question (we do try to keep the conversation interesting). If the user asks "why do you ask", the bot answers "It’s a big problem. 7 billion people means 70 million bodies a year." It’s not that the user asks why at every moment (though they can and they would learn more and risk sounding like a 3-year-old). It’s that when they do ask, their question is well answered. The illusion is not broken. And that's our goal. To maintain an illusion.

When does sufficient illusion become reality? I don't honestly know. People talking to each other constantly think the other person understands. Usually they do. Sometimes they don't. The classic example is the American talking to a foreigner, asking them several questions to which the foreigner answers "Yes." It's only when the American asks "are you dead?" and the foreigner answers "Yes" that he confirms that "Yes" is the only thing the foreigner knows in English.

Illusion is Brillig's job. To create chatbots that successfully maintain an illusion much of the time. While being entertaining or useful.
